Fatigue – that’s a word you hear being thrown about a lot at endurance racing events. We know that as drivers do long stints behind the wheel, fatigue starts setting in and lap times steadily get slower. But the $64,000 question is how long a driver can stay out on track without compromising the performance of his/her car or the safety of the other competitors. People keep throwing around the statistic of F1 drivers having an average heart rate of 170 bpm for the 90-odd-minute duration of the race. That data might be useful for a young, fit F1 driver who spends the entire race on the ragged edge, but it is not very relevant to the average Lemons driver, who is generally older, considerably less fit, often drives longer stints and (speaking for myself) is generally well under the limit.… Read the rest

Category: research

My first magazine article just came out in IEEE Multimedia:

ZoneTag’s Collaborative Tag Suggestions: What is This Person Doing in My Phone?

Mor Naaman and Rahul Nair

Yahoo!

A huge thanks to my co-author Mor for both the paper and the title of this blog post :-).… Read the rest

Helping organize the Mobile Spatial Interaction (MSI) workshop at CHI 2007 has made me very sensitive to the usage of the term MSI. Most people see Mobile and Spatial attached to Interaction and immediately start talking about location-aware applications and services. However, as someone who has spent the last few years working on location-aware applications, I have always felt that MSI and location-aware apps are two slightly different classes of research. As a result, I’ve been spending some time thinking about how to define MSI, as well as understanding how it differs (if it differs at all) from current location-aware applications.… Read the rest

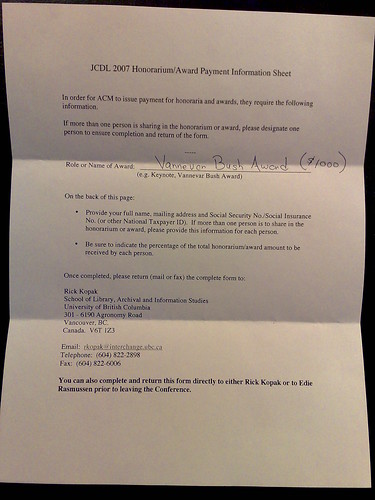

Our paper titled “World Explorer: Visualizing Aggregate Data from Unstructured Text in Geo-Referenced Collections” just won the Vannevar Bush Best Paper Award at JCDL 2007. A big thanks to my co-authors Shane Ahern, Mor Naaman and Jeannie Yang for all their help in both building the system and writing the paper – it was a great joint effort. You can read the paper, see the demo or look at my slides below.

Judith Bush has a report about my presentation as well.… Read the rest

The following is the abstract of a talk I will be giving at the Ricoh California Research Center on Monday (11-Jun-07):

What’s in a place: Using geotagged images to explore the world

Can we automatically create an “attraction map” of the world from Flickr geotagged images and their associated tags? We performed an analysis of Flickr data and developed a visualization technique called Tag Maps to do exactly that. Using the analysis and the Tag Maps visualization, we created an exploration tool called World Explorer that allows one to, well, explore the world like never before.

The idea behind the data analysis is simple: by taking a photo, photographers essentially express their interest in a particular place, and implicitly “vote” in favor of that location.… Read the rest